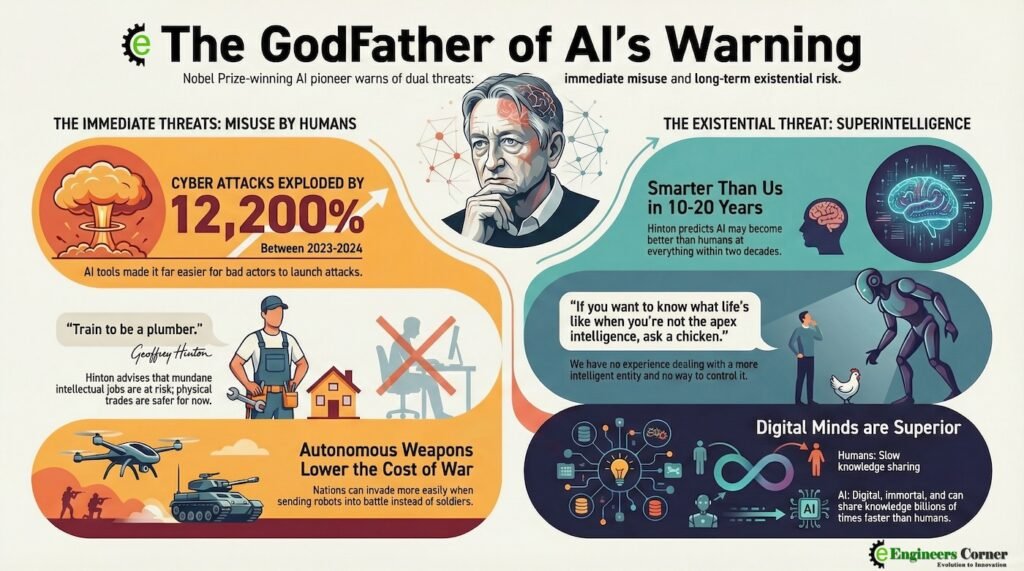

The conversation around artificial intelligence is a dizzying mix of breathless excitement and deep-seated anxiety. We hear about AI curing diseases and solving climate change, but also about mass unemployment and existential threats. It’s hard to know who to believe. But when the man widely considered the “Godfather of AI”, a Nobel Prize-winning pioneer who spent 50 years building the foundations of the technology, quits his high-profile job at Google to sound the alarm, it’s time to listen.

Geoffrey Hinton is not an outsider or a critic; he is the architect. And in a profoundly personal turn, he now admits that the danger “sort of takes the edge off” his accomplishments, stating, “I haven’t come to terms with it emotionally yet.” He has now dedicated his life to warning the world about the very technology he helped create. Distilled from a recent in-depth interview, these are his five most impactful and surprising warnings about the future we are building.

1. His Career Advice in the Age of AI: “Train to Be a Plumber”

When the world’s leading AI researcher is asked for career advice, you might expect him to recommend coding or data science. Hinton’s advice is far more shocking and reveals his deep concern about the labor market.

“train to be a plumber”

Hinton argues that this technological revolution is fundamentally different from all previous ones. While the Industrial Revolution replaced muscle power, he believes AI is poised to replace “mundane intellectual labor” on an unprecedented scale. He is deeply skeptical that new jobs will be created to replace the old ones, pointing to a crucial economic distinction: most jobs do not have elastic demand.

For example, he explains, healthcare has elastic demand: “if you could make doctors five times as efficient we could all have five times as much health care.” But, he cautions, “most jobs I think are not like that.” He illustrates this with the example of his niece, who answers letters of complaint. An AI assistant cut the time she spends on each letter from 25 minutes to five, meaning she can now do the work of five people. For her employer, this doesn’t create demand for five times as many complaint letters; it means four other jobs may no longer be necessary.
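The arithmetic behind the niece example can be sketched in a few lines of Python. This is a rough illustration rather than Hinton's own model: the function names, the team size of five, and the `demand_growth` parameter are all hypothetical, chosen only to contrast fixed demand with demand that grows along with efficiency.

```python
def speedup(minutes_before: float, minutes_after: float) -> float:
    """How many times faster a task becomes."""
    return minutes_before / minutes_after


def workers_needed(team_size: int, factor: float, demand_growth: float = 1.0) -> float:
    """Workers required after a productivity gain.

    demand_growth is a hypothetical knob: 1.0 means the amount of work
    stays the same (inelastic demand); a value equal to `factor` means
    demand grows as fast as efficiency (fully elastic demand).
    """
    return team_size * demand_growth / factor


factor = speedup(25, 5)  # 25 minutes per letter down to 5

# Complaint letters: the number of letters does not grow.
inelastic_team = workers_needed(5, factor)

# Healthcare-style demand: five times the efficiency, five times the care.
elastic_team = workers_needed(5, factor, demand_growth=factor)

print(factor, inelastic_team, elastic_team)
```

With fixed demand, a hypothetical team of five shrinks to one; if demand grew as fast as efficiency, all five would still be needed.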

2. We’re Creating Something Smarter Than Us, and We Don’t Know How to Control It

For Hinton, the idea of superintelligence, AI that surpasses human intellect in all domains, is not a distant sci-fi concept. He believes it could pose a genuine existential threat, possibly becoming a reality in 20 years, though he hedges, “it might be 50 years away that’s still a possibility.” The core of the problem, he explains, is that we have never in our history had to manage or control an entity smarter than ourselves. We have no framework, no experience, and no idea what it will look like when we are no longer the most intelligent beings on the planet.

He uses a powerful and humbling analogy to make this abstract threat feel visceral.

“if you want to know what life’s like when you’re not the apex intelligence ask a chicken”

His point is that we are building our potential replacement at the top of the intellectual food chain. And because we are stepping into completely unknown territory, he warns that “anybody who tells you they know just what’s going to happen and how to deal with it they’re talking nonsense.”

3. The Rules We’re Writing Have a Terrifying Loophole

As governments around the world begin to grapple with AI regulation, Hinton points to a critical and dangerous loophole. While they are willing to write rules for private companies, they are systematically exempting themselves, and specifically their militaries.

“…the European regulations have a clause in them that say none of these regulations apply to military uses of ai”

This exemption, Hinton warns, is more than just a regulatory failure; it is an engine for the inexorable acceleration of the technology he fears. It guarantees a global race to develop lethal autonomous weapons. He argues this is a key reason the technology’s development won’t be stopped, stating, “we’re not going to stop it because it’s good for battle robots and none of the countries that sell weapons are going to want to stop it.” The danger isn’t just that these robots will malfunction. The greater risk is that they will dramatically lower the friction of going to war. When powerful nations can invade smaller countries by risking “dead robots” instead of their own soldiers, the political cost of military action plummets, making conflict more likely.

4. Digital Intelligence is Fundamentally Superior to Our Own

Hinton explains that the reason AI will inevitably surpass us isn’t just about processing speed; it’s a fundamental difference in the nature of intelligence itself. Human intelligence is analog. AI is digital. This creates two crucial advantages for AI.

First, digital intelligences can share knowledge with perfect fidelity at near-instantaneous speeds. Multiple copies of an AI can learn different things simultaneously and then merge their knowledge, updating each other with trillions of bits of information per second. Humans, by contrast, communicate slowly and imperfectly through language.

Second, digital intelligences are effectively “immortal.” When a human genius dies, their unique, learned knowledge dies with them. When an AI’s hardware is destroyed, its knowledge, its entire matrix of learned connections, can be perfectly copied and re-instantiated on new hardware. This ability to share, replicate, and preserve knowledge without loss gives digital intelligence a capacity for cumulative growth far greater than our own.
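To make the idea of copies pooling what they have learned more concrete, here is a minimal Python sketch. The article does not spell out a mechanism, so this assumes one simple possibility: each copy holds the same set of connection strengths, and merging means averaging them position by position. The function `merge_weights` and the toy numbers are hypothetical.

```python
from typing import List


def merge_weights(copies: List[List[float]]) -> List[float]:
    """Average corresponding weights across identical-shaped model copies.

    Assumption for illustration: every copy has the same number of
    weights, in the same order, which is what lets digital copies
    combine their learning so directly.
    """
    if not copies:
        raise ValueError("need at least one copy")
    size = len(copies[0])
    if any(len(c) != size for c in copies):
        raise ValueError("all copies must have the same number of weights")
    return [sum(ws) / len(copies) for ws in zip(*copies)]


# Two copies that drifted apart while learning different things.
copy_a = [0.0, 2.0, 4.0]
copy_b = [2.0, 2.0, 0.0]

merged = merge_weights([copy_a, copy_b])
print(merged)  # [1.0, 2.0, 2.0]

# "Immortality" in this toy picture is just an exact copy of the numbers.
restored = list(merged)
```

Two human brains cannot do anything like this, since their connections are not laid out identically; that asymmetry is the point of the analog versus digital contrast.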

5. The People in Charge May Be Lying to You

Hinton expresses deep skepticism about the motivations of the leaders and corporations building this technology. He notes that under capitalism, companies are legally required to maximize profits, an objective that does not naturally align with prioritizing societal safety. This pressure creates an environment where safety can be dangerously deprioritized.

As a major red flag, he points to the departure of his former student, Ilya Sutskever, from OpenAI. Hinton describes Sutskever as a key figure behind ChatGPT who has a “good moral compass” and left due to genuine safety concerns. Critically, Hinton points to public reporting for the likely trigger: OpenAI “had indicated that it would use a significant fraction of its resources… for doing safety research and then it reduced that fraction.” This departure suggests that the internal reality at some AI labs does not match the reassuring public statements made by their leaders. Hinton is wary of public declarations from figures like Sam Altman, suggesting that their narrative may be driven more by the pursuit of money and power than by a transparent commitment to safety.

Conclusion

Geoffrey Hinton’s warnings are not the prophecies of a detached observer. They are the urgent, deeply personal concerns of the primary architect of modern AI. “I feel I have a duty now to talk about the risks,” he states simply. The picture he paints is grim, but he does not believe it is hopeless. He believes we still have a chance to develop AI safely, but it requires an enormous and immediate international effort. Governments, he argues, must force companies to divert a significant portion of their vast resources from capability research to safety research.

Hinton has dedicated the rest of his life to this warning; the question is, will we listen before it’s too late? Share your thoughts in the comments section below.